15 Things to Try When Your Website Traffic Has Flatlined

It’s always something of a shock when you log in to your analytics tool of choice (Google Analytics, Raven Tools, FoxMetrics, Clicky, whatever) only to find that the traffic graph for your site is more or less a straight line at the bottom.

Maybe it has just dropped suddenly. Maybe it has been as low as the horizon for days, or for even longer if you don’t check your analytics very often.

In any case, it’s a terrible feeling. Once you get beyond the shock, you leap into action, trying to determine why your traffic has flatlined, but it’s a haphazard process. Rather than poke around hoping to find a clue, follow this list and systematically check the possible options.

If one of these fifteen isn’t the cause, at least you’ll have eliminated all of the obvious causes.

SEO and Google Update Issues

These issues are typically caused by your content in some way or another. They’re all related to links, content, keywords, and quality. You’ll want to check your content, your keywords, your link destinations, your link anchor text, and other signs of bad SEO. There are more than just these five causes, but anything else is more likely to be a gradual decline rather than a sudden drop in traffic.

1. Did Google Update Their Algorithm?

Google changes and updates their complex search ranking algorithm quite regularly, and most major updates make the news in some way or another. The thing is, the “news” in this case is sites like Moz, Search Engine Journal, and other SEO blogs. You won’t see it on CNN or Entrepreneur, unless it’s an incredibly major update like the introduction of Panda back in 2011.

One thing you can check is when specifically your traffic dropped. You can identify a specific date, and cross-reference that date with the Google Algorithm Change History, found here and maintained by Moz.

Remember that these are the major updates and changes. Minor changes that can affect your site but aren’t widespread throughout the Internet won’t be listed. Often, these have something to do with your site and how it fits within the existing rules, rather than those rules changing.
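If you export daily visit counts from your analytics tool, pinning down the drop date doesn’t have to be guesswork. Here’s a minimal sketch that finds the steepest day-over-day fall; the `largest_drop` helper and the sample numbers are made up for illustration and aren’t part of any analytics API.

```python
from datetime import date


def largest_drop(daily_visits):
    """Return (day, fractional_drop) for the steepest day-over-day fall."""
    days = sorted(daily_visits)
    worst_day, worst_drop = None, 0.0
    for prev, cur in zip(days, days[1:]):
        before = daily_visits[prev]
        if before == 0:
            continue  # nothing to lose from a zero-traffic day
        drop = (before - daily_visits[cur]) / before
        if drop > worst_drop:
            worst_day, worst_drop = cur, drop
    return worst_day, worst_drop


# Invented sample data: steady traffic, then a cliff on March 4.
visits = {
    date(2015, 3, 1): 1200,
    date(2015, 3, 2): 1180,
    date(2015, 3, 3): 1210,
    date(2015, 3, 4): 310,
    date(2015, 3, 5): 295,
}
drop_day, drop_size = largest_drop(visits)
print(drop_day, round(drop_size, 2))
```

The date it reports is the one to look up in the change history.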

2. Is Your Recent Content Up to Par?

Sometimes, something in the new content you’ve been publishing has tripped a warning and Google has penalized you. Most of the time, this will be persistent bad links, low quality content, or hidden content. Some of these have some overlap with a hacked site, but I’ll talk more about that later.

For now, do an audit of the last few months of content. Look at the links you’ve been posting. Do they all go to similar low quality sites? Do they go to sites you don’t think you should be linking out to? This can happen particularly if you’re outsourcing all your writing to a ghostwriter, and that ghostwriter has an ulterior motive. It can also happen with guest posts.

At a bare minimum, content should be 500 words, and ideally at least 1,000. It should contain quality information; Google knows how to separate fluff from value. It shouldn’t be stolen from another source or spun, though the latter is kind of hard to identify. All links should have destinations you consider good quality and relevant to the discussion.

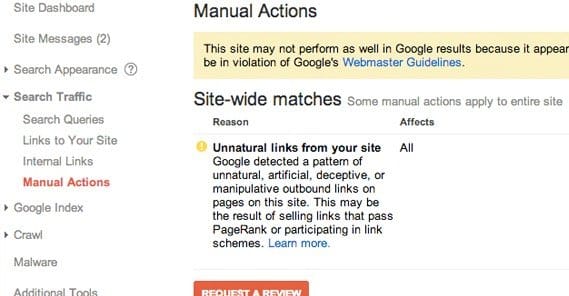

3. Is Webmaster Tools Warning You of Anything?

Google’s Webmaster Tools will warn you if you’re triggering one of their penalties. This only includes real penalties, known as manual actions, like a hacked site or a webspam penalty, not algorithmic adjustments like Panda or Penguin. There are two types of penalties: site-wide and partial match. Partial matches only apply to certain pages.

There are a number of different manual actions, which you can read more about here. I’ll summarize:

- Unnatural Links. There are three types of unnatural links penalties. One is bad links pointing at specific pages on your site, and one applies to your whole site. You can diagnose either by using a tool like Ahrefs to pull your backlink profile, after which you can disavow the worst links to get rid of them. The third is links on your site pointing to spam sites.

- Hacked Site. More on this in a later section.

- User-Generated Spam. If you have active comments but have no moderation or spam-filter plugins, spam comments can become a problem. Just remove them and you’ll be fine.

- Spammy Freehosts. This is a penalty that comes with using a web hosting service that sucks. If your web host is adding ads to your page, get rid of them.

- Thin or Valueless Content. This is similar to a Panda penalty, and you can fix it with a content audit.

- Cloaking and Redirects. Normal URL redirects are fine; redirecting users to one page and search engines to another is not.

- Pure Spam. This is a bad penalty that means Google has determined there is nothing redeeming about your site and has nuked it from the index entirely.

- Hidden Text. This is a spam technique typically involving keywords, and means you need to figure out where text is hidden on your site.

4. Did You Change Domain Name?

When you do a site migration from one domain to another, such as in a rebranding, it can be very detrimental to your SEO. If you didn’t properly redirect from the old URLs to the new, Google considers them entirely new pages and thus starts you with nothing.

There are a few cases where this can happen. If, for example, you changed the URL scheme for your blog to include the publication date for each post, or you changed it from a example.com/18848481/ URL to one more like example.com/title-of-the-post/, it’s an entirely new URL with a new page attached.

There’s one other situation where it can happen, and it’s a lot more insidious: the difference between http://example.com and http://www.example.com. Technically, each of those is a different page, and if you aren’t consistent about which one you use, it can cause SEO errors. This is admittedly a much rarer and less detrimental error, however, so it’s more likely a major change is the culprit.
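To see whether the www and non-www versions of your pages are both floating around, you can group a list of URLs by host and path. This is a rough sketch that assumes you already have such a list from a crawl or a sitemap; `group_host_variants` is a hypothetical helper, not something a crawler gives you.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_host_variants(urls):
    """Group URLs that differ only by scheme or a leading 'www.'."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        path = parts.path or "/"
        groups[(host, path)].add(url)
    # Keep only pages that are reachable under more than one variant.
    return {
        key: sorted(variants)
        for key, variants in groups.items()
        if len(variants) > 1
    }


crawled = [
    "http://example.com/about",
    "http://www.example.com/about",
    "http://www.example.com/contact",
]
duplicates = group_host_variants(crawled)
print(duplicates)
```

Anything that shows up in the result is a page that should redirect to a single preferred version.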

5. Did Your Backlink Profile Catch Up?

Sometimes, actions you took in the past have repercussions in the future. Most commonly, this is a content issue, but we’ve already looked at content. Links are the other major culprit, and when a link penalty hits, it can sometimes be difficult to tell.

The first thing you’re going to want to do is pull a list of all the links pointing at your site with a tool like Ahrefs. You’ll also want to pull a list of all the links on your site, their anchor text and their destinations. From there, you have to start the long process of analyzing the sites at the other end of the links to determine if they’re worth linking to, or if the link coming in is valuable.

Links on your site should fall into one of three categories. The first are the links that point to unrelated pages, spam pages, or otherwise valueless pages. These links should be removed. The second are links that point to low quality pages or even middling quality pages, but not pages you really want to support. These links can stay, but should be nofollowed. The third are links that go to high quality resources and very relevant pages; they can stay and be nofollowed or dofollowed if you like, up to preference.

Analytics and Data Reporting Issues

These are issues that come up with your reporting software, usually Google Analytics. If you don’t know what you’re doing, or you make a simple mistake, or something breaks in the analytics code you’re using, your traffic numbers can disappear. If your traffic flatlined but your conversions haven’t changed, that’s a sign that your data is off.

6. Did You Redesign Your Site?

A site redesign can throw a wrench into the works when you’re looking at your data, for two reasons.

One, the redesign can break your analytics tracking code, which will cause it to report incorrect data. Two, the tracking code might not be present on new pages if those pages weren’t created with a proper template, meaning data for those pages isn’t tracked. And, of course, if you changed your site significantly, it can affect your actual traffic, causing users to question where they landed and making them bounce away.

7. Is a Holiday or Seasonal Event Skewing Your Data?

This is something I’ve seen somewhat frequently; a business owner looks at their analytics and sees a steep drop in traffic, and goes into overdrive trying to figure out what caused it. In that frenzy, they forget to look at some of the more obvious answers. Is it the 4th of July, or Christmas, or another major holiday? People probably aren’t online looking at your business page on a major holiday, particularly if you’re not promoting a holiday event, so your traffic is liable to drop.

The alternative is if you were promoting a holiday, like deals for Black Friday or a one-day-only event on another holiday, and that day was yesterday. You would have a spike in traffic for the day of the deal, and today would be back to your normal traffic. Zoomed in to a 24-hour view, it looks like your traffic suddenly dropped, when really it was just abnormally high the day before.

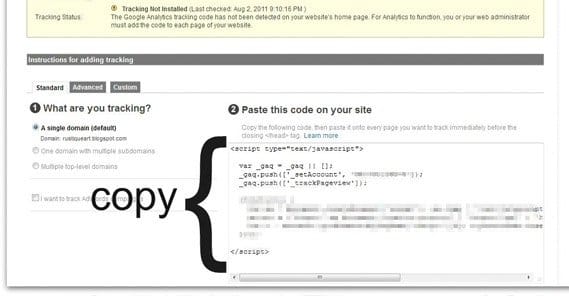

8. Is Your Analytics Code Broken or Incorrect?

Here’s one thing you can check: is the code you paste in for Google Analytics (or whatever other analytics program you use) intact and in the right place? If it’s broken, not loading, or missing, your traffic data won’t be counted. You’ll still be able to access your dashboard, but it will look as though your traffic dropped off a cliff.

Another possible issue with analytics tracking code is having an incorrect website ID. Google doesn’t go by your username, account name, or website name; they go by the unique ID assigned to you when you register. If that ID has changed, or if it was typo’d in your code, you’ll have errors in data reporting as well. Granted, if you haven’t changed your code recently, it won’t explain a sudden drop in traffic, but if you did make a change that can be one possible cause.
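Both checks, missing code and wrong ID, can be run against a page’s HTML source. The sketch below assumes Google-style IDs shaped like `UA-12345-1` or `G-XXXXXXXXXX`; the regular expression, the `check_tracking` helper, and the sample ID are illustrative assumptions, so adjust them for whatever analytics program you use.

```python
import re

# Assumed ID shapes: "UA-<account>-<property>" (Universal Analytics)
# and "G-<alphanumerics>" (Google Analytics 4).
TRACKING_ID = re.compile(r"\b(UA-\d+-\d+|G-[A-Z0-9]{6,})\b")


def check_tracking(html, expected_id):
    """Return 'ok', 'wrong id', or 'missing' for one page's HTML source."""
    found = set(TRACKING_ID.findall(html))
    if not found:
        return "missing"
    if expected_id not in found:
        return "wrong id"
    return "ok"


# Hypothetical page source with a made-up tracking ID.
page = "<script>ga('create', 'UA-12345-1', 'auto');</script>"
print(check_tracking(page, "UA-12345-1"))
print(check_tracking(page, "UA-12345-2"))
print(check_tracking("<html></html>", "UA-12345-1"))
```

Run it over a few pages built from different templates; a page that comes back "missing" is one your redesign probably left behind.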

9. Are You Coming Down Off a Spike in Traffic?

Did a post you made go viral? Did you start a new ad campaign? Did you do something at a public event or publicity stunt that gave you a surge in visibility?

All of these can cause your traffic to shoot up for a few days or weeks, but that traffic will gradually go away. In the case of viral traffic, it’s often little more than a brief spike with very little residual traffic. The people who follow viral trends just move on to the next trend; they don’t stick around on the site that originated it.

Again, this is a problem with the way you’re looking at your data; you’re not zoomed out enough, temporally, to get context for the drop in traffic. You’ll need to put everything in context and see whether your low traffic is actually low or just normal after a surge.
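One way to get that context is to compare today against the median of a longer window, since a median mostly ignores a few spike days. This is a minimal sketch with invented numbers; the 30-day window and the `compare_to_baseline` name are my assumptions, not a standard.

```python
from statistics import median


def compare_to_baseline(history, today):
    """Return today's visits as a fraction of the median of `history`."""
    baseline = median(history)
    if baseline == 0:
        return None  # no meaningful baseline to compare against
    return today / baseline


# Invented data: 27 ordinary days plus a three-day spike.
last_30_days = [400] * 27 + [2500, 3100, 900]
ratio = compare_to_baseline(last_30_days, today=420)
print(ratio)
```

A ratio near 1 means today is normal and yesterday was the anomaly; a ratio well below 1 means the drop is real.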

10. Is Your Analytics Report Filtered Incorrectly?

Another possible cause is filtering in your Google Analytics reporting. If you’re used to seeing certain numbers, and the numbers you’re seeing are much lower, you might be just looking at one particular traffic source out of many. If you’re used to looking at total traffic, for example, seeing just your mobile traffic will be a shock. This happens occasionally if you’re working with a team and someone changes your dashboard settings, so the reports you generate don’t contain all of the data you’re used to seeing. Check for filters and data segmentation before you panic.

Technical Issues

These are issues with outside attacks, glitches in the Internet, problems with hosting, and problems with site files. They can all cause problems with actual traffic coming in, rather than from data being reported, and thus they are all problems you need to solve as soon as possible.

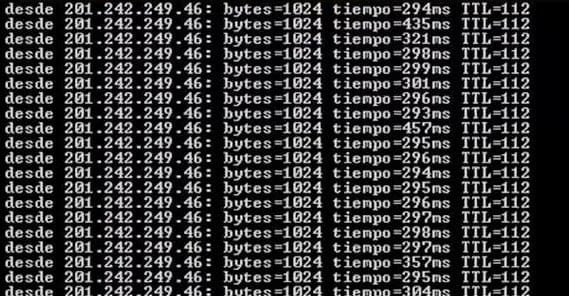

11. Are You Experiencing A DDoS Attack?

A Distributed Denial of Service attack is a common route hackers use to shut down a site. They essentially send requests to load data from your website servers at a rate of thousands per second, overloading your web host and making it impossible for a legitimate user to load your page.

While it might sound like this would cause a spike in traffic, Google recognizes and filters bot and fake traffic automatically in reporting. Thus, when you look at your data, you’ll see that no one is visiting your site. This is accurate because they can’t; your servers are locked up trying to provide data to hackers spamming the database with queries.

There’s not a lot you can do about a DDoS, short of hiring a third-party company to intercept and filter your traffic, or working with your web host to block IPs and boost server capacity. In the meantime, your site is functionally down, and you’re losing readers and money. It’s a bad situation to be in, which means it’s a good thing it doesn’t happen often.

12. Did Your Domain Name or Hosting Expire?

I’ve seen this a few times; the only indication you have of your information expiring is the sudden drop of traffic, because you don’t think to check your site every day. This happens particularly with businesses that do most of their business offline, and only use their websites as portals to the occasional new customer.

Check your domain and your hosting contracts. With your domain name, registration may have expired and you will have to purchase it again. If you’re very, very unlucky, someone bought it out from under you and you will have to buy it back, likely for more than you would have paid normally. This is rare, though, unless you’re a big name business.

For hosting, you’ll need to talk to your hosting company about renewing your site. Chances are they won’t have removed your data because temporary lapses in information do happen, and they don’t want to piss off customers by deleting websites the second a bill isn’t paid.

While you’re at it, check your payment information for both services to make sure your autobilling is accurate. If they’re trying to bill an expired card, it can cause issues down the road.

13. Did Your SSL Certificate Expire?

This is a similar issue to the above, but rather than taking your site down completely, it just causes web browsers to warn users about the site not being secure and its certificate being invalid. They can add a security exception to browse, but many will be turned away out of fear of hacking. You will need to refresh your security certificate in order to remove that warning. It’s a simple process, and a quick fix, at least.
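You can also check how long a certificate has left before it bites you. The sketch below uses Python’s standard `ssl` module; the helper names are mine, and `fetch_not_after` needs network access, so treat it as a starting point rather than a monitoring tool.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter time (negative if past)."""
    now = time.time() if now is None else now
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def fetch_not_after(hostname, port=443, timeout=5):
    """Read the notAfter field from a live server's certificate.

    With default verification, an already-expired certificate makes the
    handshake raise ssl.SSLCertVerificationError, which answers the
    question on its own.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


# Offline example with fixed, made-up timestamps.
as_of = ssl.cert_time_to_seconds("Jan  1 00:00:00 2030 GMT")
remaining = days_until_expiry("Jan 11 00:00:00 2030 GMT", now=as_of)
print(remaining)
```

In practice you would call `days_until_expiry(fetch_not_after("example.com"))` with your own domain and renew well before the number gets small.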

14. Was Your Site Hacked?

There are generally three types of site hacking. The first type won’t kill your SEO, but it can kill your business; it’s when a hacker compromises your site and steals data without doing anything else. Since it doesn’t nuke your traffic, I’m glossing over it.

The second kind of hacking is the big, obvious hacking. If your site was taken down and replaced by a pro-ISIS propaganda message, that’s a big hacking. Google will recognize it as a hack and stop people from visiting, and it means you have to take the time to fix everything before you can get your traffic back.

The third kind is more subtle and insidious; a hacker compromises your site and, rather than destroying your site, they just add hidden text and links to your code. Your site looks unchanged, but it’s passing link value to the site of the hacker’s choice. That is, until Google discovers and penalizes your site for hidden text you didn’t know was there.

Thankfully, Google has a very robust guide for fixing a hacked site, which you can find and follow here.

15. Did Your Robots.txt File Change?

Finally, there are issues with your robots.txt file. A robots.txt file is a basic text file in the root directory of your site. It governs search engine robots and other bots accessing your site. If you accidentally added a rule to block them from viewing your site, you would be blocking Google entirely, and that would destroy your traffic. Check to see if the file contains a “User-agent: *” line followed by a “Disallow: /” line. If so, you’re blocking all robots, including Google. Remove the Disallow rule and your traffic should come back.
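If you’d rather not eyeball the file, Python’s standard `urllib.robotparser` can tell you whether a given set of rules shuts Googlebot out. A minimal sketch, assuming you paste in the contents of your robots.txt; `googlebot_allowed` is a hypothetical helper name and the URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url="https://example.com/"):
    """Return True if the given robots.txt text lets Googlebot fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# The blocking rule described above, and a harmless version of it.
blocking = "User-agent: *\nDisallow: /\n"
open_site = "User-agent: *\nDisallow:\n"

print(googlebot_allowed(blocking))
print(googlebot_allowed(open_site))
```

An empty `Disallow:` line blocks nothing, which is why the second file is fine and the first is not.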

ContentPowered.com
