How to Redesign a Website Without Hurting Your Traffic

Any time someone redesigns their website, they risk doing terrible damage to their search ranking. Part of the problem is that they ignore what goes into internet marketing. They’re outsourcing to some third-party SEO professional, after all; it’s that professional’s responsibility to handle SEO.

The problem is, a lot of what goes into SEO comes from elements of site design. If you change your site and you mess with those elements in a detrimental way, you lose out on a ton of search value. So what are those elements, and what should you keep an eye on if you want to maintain your search presence through a redesign?

Crawl Your Current Site for Data Verification

The very first thing you have to do when you’re starting up a site redesign is crawl and catalog your current site. There are a bunch of ways you can do this, but the easiest is to nab yourself a copy of Screaming Frog and start harvesting data. You’ll want to create a spreadsheet that shows the URL of every page, along with its title, H1 tags, meta data, canonicalization, internal links, image alt text, and whatever else is relevant. There’s a ton of data you can grab with Screaming Frog, and it’s worth having as much as possible in this starting phase.

You’ll also want to make note of some potential SEO problems while you’re running this scan. Flag any pages that have missing page titles, page titles that duplicate other pages, page titles that are overly long or short, pages with missing meta information, pages with broken links, pages with duplicate content, and other such data.
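If your crawler can export its results as a CSV, the flagging described above can be roughed out in a few lines of Python. This is only a sketch: the column names (`Address`, `Title 1`, `Meta Description 1`) are assumptions about the export and may need adjusting, and the length thresholds are illustrative picks, not official limits.

```python
import csv
from collections import Counter

# Assumed column names; adjust them to match your crawler's export.
URL_COL = "Address"
TITLE_COL = "Title 1"
DESC_COL = "Meta Description 1"

# Assumed thresholds in characters; illustrative only, not official limits.
MIN_TITLE_LEN = 30
MAX_TITLE_LEN = 60


def flag_pages(rows):
    """Return {url: [issue, ...]} for pages with title or description problems."""
    rows = list(rows)
    # Count titles case-insensitively so "Home" and "home" count as duplicates.
    title_counts = Counter(
        (row.get(TITLE_COL) or "").strip().lower() for row in rows
    )
    flagged = {}
    for row in rows:
        url = row.get(URL_COL, "")
        title = (row.get(TITLE_COL) or "").strip()
        desc = (row.get(DESC_COL) or "").strip()
        issues = []
        if not title:
            issues.append("missing title")
        else:
            if title_counts[title.lower()] > 1:
                issues.append("duplicate title")
            if len(title) < MIN_TITLE_LEN:
                issues.append("short title")
            elif len(title) > MAX_TITLE_LEN:
                issues.append("long title")
        if not desc:
            issues.append("missing meta description")
        if issues:
            flagged[url] = issues
    return flagged


def flag_pages_from_csv(path):
    """Load a crawl export from disk and flag its pages."""
    with open(path, newline="", encoding="utf-8") as handle:
        return flag_pages(csv.DictReader(handle))
```

Calling `flag_pages_from_csv("crawl.csv")` (the file name is a placeholder) gives you a dictionary you can paste back into your spreadsheet as a to-do list for the redesign.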

This information will be used later, to fix some of these mistakes in your redesign, since you have everything open for maintenance as it is. With luck, your redesign will result in a higher search ranking rather than a lower one.

Don’t Change URL Structure

It’s almost always a bad idea to change your URL structure throughout your whole site in a redesign. The problem stems from how Google indexes content and assigns value. Think of your page URL like your driver’s license number. It’s more or less a permanent, unique identifier of who you are, but it can be changed if it’s really necessary, such as when you move to another state and need a new ID. That change, though, will mean any documentation that used the old number is now incorrect.

Google works the same way. All the value of a page is connected to the URL. If you change the URL, as far as Google is concerned, it’s a new page. Worse, it might be a page that now is duplicating the content of an existing page. If you don’t tell Google you changed the URL, they might think you’re stealing content or duplicating it from yourself.

If You Change URLs, Redirect Old Pages

Now, there are some reasons why you might want to change your URLs. One of them is a rebranding that forces you to change your root domain. If everything is going from www.example.com to www.exampletwo.com, that change tells Google it’s all new pages, unless you implement 1:1 redirects. Each old page should redirect to its corresponding new page. Additionally, you should tell Google via webmaster tools that you changed domains. You can read more about the domain change process here.

Another case might be when you have a lot of old www.example.com/blog/11251997/38384774302.asp links on your site, or similarly obscure, unreadable links. You might want to change them all to www.example.com/blog/this-awesome-blog-post-title. Doing so changes the URL, and that becomes an issue. This time all you need to do is make the proper 1:1 redirects.

Make sure you’re always using 301 redirects when you redirect an old URL to a new one. Avoid redirecting URLs to pages where the content has changed significantly, unless you have a very good reason. In general, old page to new page is the proper format, with the content and meta information maintained.
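To make the 1:1 idea concrete, here is a minimal sketch that builds readable slugs, sanity-checks an old-to-new redirect map, and prints it as `Redirect 301` lines. The Apache output format is an assumption about your server; the function names are made up for illustration, and other servers need their own syntax.

```python
import re


def slugify(title):
    """Turn a post title into a lowercase, hyphen-separated URL slug."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


def check_redirect_map(redirects):
    """Return a list of problems found in an {old_path: new_path} map."""
    problems = []
    targets = {}
    for old, new in redirects.items():
        if old == new:
            problems.append(f"{old} redirects to itself")
        # A target that is itself redirected creates a chain of hops.
        if new in redirects and new != old:
            problems.append(f"{old} -> {new} is a chain; {new} is also redirected")
        # Two old pages sharing one target means the map is not 1:1.
        if new in targets:
            problems.append(f"{old} and {targets[new]} both point to {new}")
        targets.setdefault(new, old)
    return problems


def apache_rules(redirects):
    """Render the map as Apache mod_alias 'Redirect 301' lines."""
    return [f"Redirect 301 {old} {new}" for old, new in sorted(redirects.items())]


redirects = {
    "/blog/11251997/38384774302.asp": "/blog/" + slugify("This Awesome Blog Post Title"),
}
for problem in check_redirect_map(redirects):
    print("WARNING:", problem)
print("\n".join(apache_rules(redirects)))
```

One caveat worth knowing: Apache’s `Redirect` matches by path prefix, so a rule for a folder will also catch everything underneath it. For page-level rules like the one above, that’s usually what you want.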

Don’t Remove Old Content without Checking Its Value

In general, your old content is still valuable to keep around, as long as it isn’t thin or duplicate or spam. I’m assuming that by now, in 2016, any content still lingering on your live site has passed the minimum standards set by algorithmic updates like Panda. After all, you’re interested enough in SEO to be reading this post; if you haven’t done something as basic as a content audit by now, you really have bigger fish to fry.

Old content, even if it isn’t actively getting you traffic, is still valuable to keep around. We don’t burn books in a library just because they haven’t been checked out in a few months, after all. That content represents a resource that exists if someone chooses to come looking. It also sits as the landing page for any number of existing links. On top of all of that, it’s proof that you’ve been writing quality content since the date of posting, which is a minor signal of value for your site.

In general, you should avoid removing old content unless it is somehow actively hurting you. If it’s bad enough that it’s not getting any traffic, well, who cares? If it’s not getting any traffic, no one is seeing it anyways. It’s not as if a few blog posts are taking up a ton of space in your web host, either.

Maintain Meta Information

For the most part, you want to maintain your existing meta information from your old pages in your new pages. This means any meta titles, meta descriptions, H1 tags – assuming you don’t have more than one per page – image alt text, canonicalization, and link attributes.

This is the point where you can implement fixes for missing or duplicate meta information that you found in your audit. Any time a page lacks a title or a description, write one. Even if no one is actively browsing the page, Google’s crawlers are, and they assign value to that information.

You can also implement extra meta structure, like structured data through Schema.org. That alone can be a boost in rankings, though it can be tedious to implement if your site is sufficiently large.
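If the tedium is what’s stopping you, structured data is easy to generate from data you already have. Below is a rough sketch that builds a Schema.org `BlogPosting` block as JSON-LD. The function name and the sample values are hypothetical, and a real page would likely want more properties than these four.

```python
import json


def article_json_ld(headline, url, date_published, author_name):
    """Build a JSON-LD <script> block describing one blog post."""
    data = {
        "@context": "https://schema.org",
        "@type": "BlogPosting",
        "headline": headline,
        "url": url,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }
    # Escape "</" so a stray "</script>" in the data cannot close the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical sample values, for illustration only.
print(article_json_ld(
    "How to Redesign a Website Without Hurting Your Traffic",
    "https://www.example.com/blog/redesign-without-hurting-traffic",
    "2016-01-15",
    "Jane Doe",
))
```

Hook something like this into your page templates and every post gets its markup for free, no matter how large the site is.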

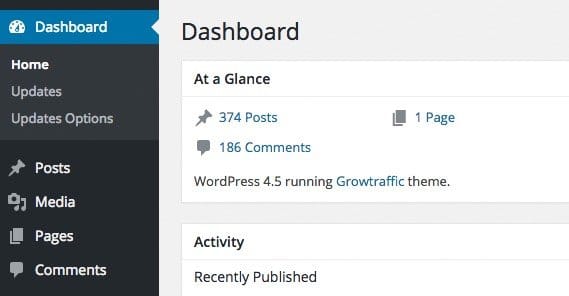

Build and Test Your New Site NoIndexed

As you’re constructing your new design, you should be testing it in an environment that at minimum simulates being on a live server. You can do this by actually uploading it to your live server if you want, but you will have to change the URL for testing. You might have your site at www.example.com, and your testing site with every URL moved under www.example.com/testing/. The benefit to this is that you can test it live with your CMS and your host. The downside is that it can cause issues if Google indexes it.

In order to prevent this from happening, you’re going to need to temporarily noindex your new design test files in some way. The easiest way is to edit your robots.txt file to block the entire /testing/ subfolder. If you’re using a CMS like Magento or WordPress, there are generally specific instructions for each. This is how you do it with Magento, for example.
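Here is roughly what that robots.txt rule looks like for the /testing/ folder from the example URL above, along with a quick way to confirm it behaves the way you expect using Python’s built-in `urllib.robotparser`. Treat it as a sketch: the helper name is made up, and keep in mind that robots.txt only asks crawlers not to fetch those URLs.

```python
from urllib.robotparser import RobotFileParser

# The rule itself: block every crawler from the /testing/ subfolder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /testing/
"""


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://www.example.com/testing/index.html",
    "https://www.example.com/blog/",
):
    status = "crawlable" if is_crawlable(ROBOTS_TXT, url) else "blocked"
    print(url, "->", status)
```

The test folder should come back blocked and the live blog should come back crawlable. If it’s the other way around, fix the rule before you upload anything.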

Alternatively, you can run everything on a virtual server test environment. You don’t need to worry about noindexing everything, because you’re running it on a fake server. This will also be faster to test, because the files are all local and you don’t need to upload them to a web server. On the other hand, it’s more complicated to set up, and it won’t find issues that might stem from the way your web host handles certain elements of web hosting.

Verify Operational Site Structure and Content Prior to Replacing

One of the main things you need to do with your site’s new design is make sure everything works. Make sure your site structure and navigation are all functional. If you did something like add breadcrumbs to your navigation, make sure they’re uniformly present, that they don’t get into recursive loops if they’re dynamic, and that they work.
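A crawl of your test site gives you enough data to check navigation automatically. The sketch below assumes you have already turned that crawl into a simple dictionary of page paths and the internal links found on each one (that format is my assumption, not something a tool hands you directly). It reports links that point nowhere and pages you can’t reach by clicking through from the home page.

```python
from collections import deque


def audit_navigation(pages, home="/"):
    """pages maps each path to the list of internal paths it links to.

    Returns (broken, unreachable): broken is a list of (source, target)
    pairs whose target does not exist; unreachable is a list of pages
    that cannot be reached by following links from the home page.
    """
    broken = sorted(
        (source, target)
        for source, targets in pages.items()
        for target in targets
        if target not in pages
    )
    # Breadth-first walk from the home page; the seen set also guards
    # against looping forever on circular navigation.
    seen = {home} if home in pages else set()
    queue = deque(seen)
    while queue:
        current = queue.popleft()
        for target in pages[current]:
            if target in pages and target not in seen:
                seen.add(target)
                queue.append(target)
    unreachable = sorted(set(pages) - seen)
    return broken, unreachable


# Hypothetical site map, for illustration only.
site = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/", "/blog/post-1/"],
    "/about/": ["/contact/"],
    "/blog/post-1/": ["/"],
    "/orphan/": ["/"],
}
broken, unreachable = audit_navigation(site)
print("Broken links:", broken)
print("Unreachable pages:", unreachable)
```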

One of the worst things you could do when uploading a new site is upload one that’s broken. You have to scramble to fix it – and there will be minor bugs to fix regardless, so don’t do one of those “push it live at 6pm Friday then go home” deals – and it might take a while to fix.

If you upload a broken site and that’s what Google indexes, you’re going to take a massive hit to your SEO. This hit might last for a few hours, or it might last a few days, depending on how quickly you fix the problems and how soon Google goes back and indexes it again. Ideally, you’ll be able to ping Google when you’ve fixed the issues, but they aren’t always quick to respond.

Make Sure Your New Design Works for Mobile Devices

If you’ve been following this post like a checklist, taking it an item at a time, this one probably comes a little late in the order. One of the more recent and more important search ranking signals today is mobile compatibility.

Google has recognized that an increasing amount of web traffic is taking place via mobile devices, be they tablets, smartphones, e-readers, or more exotic devices. They’ve decided to back this trend by making mobile compatibility a search ranking factor. It’s very, very minor for desktop searches, so your ranking there won’t change much, but for mobile traffic it’s the difference between being listed on page 20 and being listed on page 1.

The main reason I bring this up is that, at this point, there’s no excuse not to have a mobile responsive page. The number one excuse I’ve heard for not implementing responsive design is “we’ll do it in our next site redesign.” Well, guess what, buddy? This is that redesign, and it’s time to put your cards on the table. Implement a responsive design or suffer from your new site lacking a critical feature.

Make Sure Your New Design is Faster than Your Old Design

Another important search ranking factor is speed, so make sure your new design is fast. It’s one thing to update the functionality with a bunch of fancy scripts, but if those scripts make your site load 3x slower, they’re going to tank your search ranking. Sometimes you need to make a choice between style and speed.

Thankfully, there are ways you can keep your fancy scripts and dynamic content generation and whatever else you want, while still having a fast site. You can offload your scripts onto a content delivery network that serves them from off-site. You can also defer them or load them asynchronously, so that the content shows up before all your fancy navigation and users can start to actually use your site.
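If you want a quick list of scripts to raise with your developers, you can scan a page’s HTML for external scripts that have neither `async` nor `defer`, since those hold up rendering while they load. This is a rough sketch using Python’s standard `html.parser`; the class and function names are made up, and it only looks at the markup, not at actual load times.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collect external scripts that load without async or defer."""

    def __init__(self):
        super().__init__()
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        # Module scripts are deferred by default, so skip them.
        if attributes.get("type") == "module":
            return
        if src and "async" not in attributes and "defer" not in attributes:
            self.blocking.append(src)


def find_blocking_scripts(html):
    """Return the src of every render-blocking external script in html."""
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


# Hypothetical page head, for illustration only.
sample = (
    '<head>'
    '<script src="/js/menu.js"></script>'
    '<script src="/js/slider.js" defer></script>'
    '<script async src="/js/analytics.js"></script>'
    '<script>var inline = 1;</script>'
    '</head>'
)
print(find_blocking_scripts(sample))
```

In the sample, only `/js/menu.js` gets flagged. Whether a flagged script can safely be deferred depends on what it does, so treat the output as a list of questions, not a list of fixes.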

If your site is noticeably slower, talk to your developers and raise concerns. Site speed is an important factor for both search ranking and user experience. Don’t neglect it.

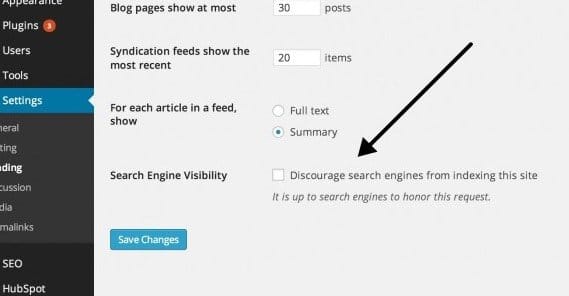

Make Sure to Remove the NoIndex Tags Prior to Going Live

This one shouldn’t have to be said, but now and then I encounter someone frantic about how they just implemented a site redesign and now their site has been removed from the index. They’re frantically auditing, looking for content issues, looking for broken redirects, looking for anything that might be wrong. As it turns out, they forgot to remove the noindex tag when they went live, and Google just saw their site drop off the face of the earth. As soon as they removed it, their rankings more or less came back.
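A check like this is easy to automate so it never depends on someone remembering. The sketch below looks for a `noindex` directive in a page’s robots meta tag using Python’s standard `html.parser`. The names are made up for illustration, and it only inspects the HTML you hand it, so a block set in robots.txt or in a server header would need its own check.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html):
    """Return True if the page's robots meta tag contains noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<head><meta name="robots" content="noindex, nofollow"></head>'
if has_noindex(page):
    print("Still noindexed: do not go live yet.")
```

Run it against your key pages as the last step of your launch checklist, and again right after you go live.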

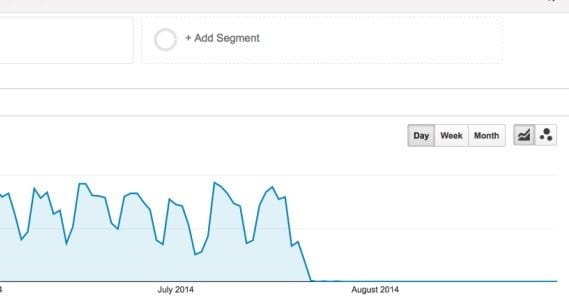

Monitor Ranking Per Page Ongoing

Once you’re live and you’ve removed the “noindex” from your site, do a search ranking crawl as soon as Google has indexed the new pages. Keep doing this crawl and monitor your search rankings on a per-page basis. You should, ideally, see pages rising over time. Most of your changes were either cosmetic, beneficial functionally, or beneficial from an SEO perspective.

If you see any specific page dropping, it’s time to do some investigation to see why that page is dropping. There are a million possible issues, so just take it as it comes. At the same time, if your whole site is dropping overall, you’ll have to figure out what is actually causing that kind of issue. That’s for troubleshooting, though, not for this implementation process.
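Per-page monitoring boils down to comparing two snapshots. Here is a minimal sketch, assuming you can get your rankings into a dictionary of URL to position (where 1 is the top result) from whatever rank tracker you use. The function name, the sample data, and the three-position threshold are all illustrative assumptions.

```python
def ranking_drops(before, after, threshold=3):
    """Return (url, old_position, new_position) for pages that slipped.

    A page is reported if it fell by at least `threshold` positions, or
    if it no longer ranks at all (new_position is None).
    """
    drops = []
    for url, old_pos in before.items():
        new_pos = after.get(url)
        if new_pos is None:
            drops.append((url, old_pos, None))
        elif new_pos - old_pos >= threshold:
            drops.append((url, old_pos, new_pos))
    return sorted(drops, key=lambda drop: drop[0])


# Hypothetical snapshots taken before and after the redesign.
before = {"/": 2, "/blog/a": 5, "/blog/b": 8}
after = {"/": 1, "/blog/a": 12}

for url, old_pos, new_pos in ranking_drops(before, after):
    print(f"{url}: was {old_pos}, now {new_pos or 'not ranking'}")
```

A page that disappears from the second snapshot entirely is worth checking first, since that often points to a missing redirect or a leftover noindex.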

ContentPowered.com