Should I Block Bots and Website Crawlers from My Site?

A large portion of the traffic that arrives on your site is going to come from non-human sources, and that’s perfectly fine. If you’re curious how much of your traffic comes from bots, Google Analytics can give you an estimate these days. That wasn’t always the case, and the figure still isn’t 100% accurate, because some bots are built specifically to slip past bot-filtering methods.

Good Bots

Many of the bots that visit your site are perfectly benign, and some are even good for it. For example, Google discovers new content online, and tells when a piece of content has changed, by running a massive swarm of software around the clock. This swarm follows links, jumps from site to site, and indexes new and changed content.
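To make “follows links and indexes new and changed content” a little more concrete, here is a toy sketch in Python. It is not how Google’s crawlers actually work: it assumes a hypothetical in-memory `WEB` dictionary in place of real HTTP fetches, crawls it breadth-first, and compares a SHA-256 hash of each page against the previous crawl to decide whether the page is new, changed, or unchanged.

```python
import hashlib
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
# A real crawler would fetch each page over HTTP instead.
WEB = {
    "a.example/": ("Home page", ["a.example/blog", "b.example/"]),
    "a.example/blog": ("Blog index", ["a.example/"]),
    "b.example/": ("A different site", ["a.example/blog"]),
}


def crawl(start, index):
    """Breadth-first crawl from `start`.

    `index` maps URL -> content hash from earlier crawls and is updated in
    place. Returns a dict of URL -> "new", "changed", or "unchanged".
    """
    status = {}
    queue = deque([start])
    seen = {start}
    while queue:
        url = queue.popleft()
        text, links = WEB[url]
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if url not in index:
            status[url] = "new"
        elif index[url] != digest:
            status[url] = "changed"
        else:
            status[url] = "unchanged"
        index[url] = digest
        # Follow every outgoing link we haven't queued yet, even across sites.
        for link in links:
            if link in WEB and link not in seen:
                seen.add(link)
                queue.append(link)
    return status
```

Calling `crawl("a.example/", index)` with an empty `index` marks every page as new; if you then edit one entry in `WEB` and crawl again with the same `index`, only that page comes back as changed.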

Google’s bots are complex programs, with a deep set of rules that govern their behavior. For example, adding a nofollow attribute (rel="nofollow") to a link tells Googlebot not to follow that link, so it generally won’t crawl or record what’s on the other side. Google’s bots also respect the noindex directive, which keeps a page out of the index; apply it to every page if you want to be invisible to Google entirely.
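Here’s a rough sketch of how a polite crawler might honor those two signals while reading a page, using Python’s built-in `html.parser`. The class name `RobotsAwareParser` is hypothetical, and real search engines handle many more cases (Google, for one, treats nofollow as a hint rather than a strict rule).

```python
from html.parser import HTMLParser


class RobotsAwareParser(HTMLParser):
    """Collects links a polite crawler may follow and notes a noindex meta tag."""

    def __init__(self):
        super().__init__()
        self.noindex = False        # True if <meta name="robots"> says noindex
        self.follow_links = []      # links without rel="nofollow"
        self.nofollow_links = []    # links the crawler should skip

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None, hence the `or ""`
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            directives = [d.strip().lower() for d in (attrs.get("content") or "").split(",")]
            if "noindex" in directives:
                self.noindex = True
        elif tag == "a" and attrs.get("href"):
            rel_values = (attrs.get("rel") or "").lower().split()
            if "nofollow" in rel_values:
                self.nofollow_links.append(attrs["href"])
            else:
                self.follow_links.append(attrs["href"])
```

After `parser.feed(html)`, a crawler would skip indexing the page when `parser.noindex` is true and queue only `parser.follow_links`.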

Every search engine has its own bots. Bing (whose index also powers Yahoo’s results) has a fleet similar to Google’s, running on similar rules. Other search engines have their own, perhaps less sophisticated, bots.

Any time you see a search engine that advertises the “dark net” or the invisible web, that search engine is boasting that its web crawlers don’t respect website boundaries and will index content that webmasters don’t necessarily want indexed. This produces a broader search index, with content that won’t show up through Google, but it also produces a less useful set of search results. After all, many webmasters block files and folders simply because they’re basic system files of no use to anyone.

Bad Bots

On the other end of the spectrum, you have the bad bots. Some search bots fall into this category because they intentionally seek out and index content on your site that you don’t want displayed to the public. They ignore robots directives, but they can still be stopped by user agent or IP blocks, if you can identify them. Keep in mind that a user agent string is trivially easy to fake, so IP blocks are the more reliable of the two.
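A user agent block can be as simple as refusing any request whose User-Agent header contains a name you’ve blocklisted. The sketch below wraps a Python WSGI app; `BLOCKED_AGENTS` and the bot names in it are hypothetical placeholders, and since a bot can send any User-Agent it likes, treat this as a first filter rather than a guarantee.

```python
# Hypothetical blocklist: lowercase fragments of User-Agent strings to refuse.
BLOCKED_AGENTS = ("badcrawler", "evilscraper")


def block_user_agents(app):
    """Wrap a WSGI app so blocklisted user agents get a 403 response."""

    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(fragment in user_agent for fragment in BLOCKED_AGENTS):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        # Everyone else reaches the real application.
        return app(environ, start_response)

    return middleware


def site(environ, start_response):
    """Stand-in for your actual site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


app = block_user_agents(site)
```

To try it locally, you could serve `app` with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and send requests with different User-Agent headers.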

The problem with bots that index content meant to stay hidden is that they open the door for hackers, who can use access to those files to compromise your system. Hackers can look for specific versions of specific files indexed on these darknet search engines, and target your site if it’s running outdated software.

There are also spam bots. These bots crawl sites looking for comment fields and contact forms, then fill them out with a predefined message, usually some kind of affiliate link, spam link, or ad. There’s an ongoing arms race between the developers of spam bots and the developers of anti-spam tools such as the Akismet plugin. The idea is to recognize the content and the IP addresses of the bots and block them before they can litter your blog with spam.
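As a toy illustration of the two signals mentioned above, content and IP address, the Python function below flags a comment as spam if it comes from a known spam IP, contains a blocklisted phrase, or carries too many links. Akismet’s real filtering is far more sophisticated and is not shown here; the IP set, phrases, and link threshold are all hypothetical values.

```python
import re

# Hypothetical data: IPs already caught spamming (documentation-range examples),
# phrases that only spam tends to use, and a cap on links per comment.
KNOWN_SPAM_IPS = {"203.0.113.7"}
SPAM_PHRASES = ("buy now", "cheap pills")
MAX_LINKS = 2

LINK_RE = re.compile(r"https?://", re.IGNORECASE)


def looks_like_spam(comment_text, ip):
    """Return True if a comment should be held back as likely spam."""
    # Signal 1: the sender's IP address.
    if ip in KNOWN_SPAM_IPS:
        return True
    # Signal 2: the content itself.
    text = comment_text.lower()
    if any(phrase in text for phrase in SPAM_PHRASES):
        return True
    return len(LINK_RE.findall(comment_text)) > MAX_LINKS
```

In practice the blocklists would be updated as new spam arrives, which is exactly the arms race described above.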

Down a more dangerous alley, you also have botnet bots. These are malware-infected computers that have been enslaved to the whims of a hacker, who will often sell time on the botnet to other, lesser hackers. Botnets are the leading source of DDoS (distributed denial of service) attacks. Whenever you hear about a major site being taken down, it’s probably a botnet at work. Blocking these bots is difficult because they come from ordinary computers that just happen to be infected with malware.

Botnets also often expose security holes. When a server or its infrastructure is subjected to that much stress, it tends to buckle. Depending on how it buckles, it might process data in ways it shouldn’t, which leaves the server open to a malicious attack.

Should You Block Bots?

Now, to return to the original question: you almost certainly shouldn’t block every bot. Blocking Googlebot, for example, just means that your site will be removed from the search index and you’ll lose all of your organic traffic. That’s on par with being deindexed as a penalty, except you’re doing it to yourself.

On the other hand, you definitely want to block spambots. You’ll want to protect yourself from DDoS attacks as well, but that’s significantly harder and can’t be done the same way you block other bots.

Fighting Bad Bots

There are a few ways to block and combat bad bots.

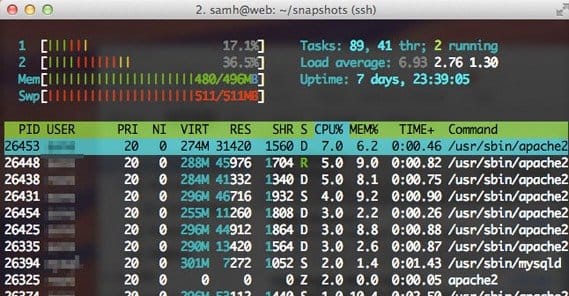

First, you can implement robots.txt directives. Robots.txt is a file in the root of your site that well-behaved bots read and obey. You can block all bots from an entire site, block bots from specific pages or folders, or block individual bots by naming their user agent. Robots.txt can’t block by IP address; that takes a server-level block. You can read more about that here.
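Here’s what a small robots.txt might look like, and how a compliant bot checks it, using Python’s standard `urllib.robotparser` module. The file contents and the bot name `BadCrawler` are hypothetical; note that the rules are keyed by user agent and URL path, not by IP address.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut one named bot out of the whole site,
# and keep every other bot out of the /wp-admin/ folder.
ROBOTS_TXT = """\
User-agent: BadCrawler
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A compliant bot asks before fetching each URL; a bad bot simply doesn't ask.
for agent, url in [
    ("BadCrawler", "https://example.com/blog/"),
    ("Googlebot", "https://example.com/wp-admin/options.php"),
    ("Googlebot", "https://example.com/blog/"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

The last line of the loop is the whole enforcement mechanism, which is why robots.txt only stops bots that choose to obey it.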

The worst bots won’t care about your robots.txt file, which is unfortunate. It means you’ll have to use a more stringent, but harder to implement, method to block them: editing a server configuration file called .htaccess (on Apache servers) and adding the bots’ IP addresses to a deny list. It’s fairly complicated and easy to get wrong, so I recommend reading up on it first.
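Exact .htaccess syntax depends on your Apache version, so rather than guess at your configuration, here is the matching logic such a deny list performs, sketched in Python with the standard `ipaddress` module. The addresses are hypothetical examples drawn from ranges reserved for documentation; a deny entry can be a single IP or a whole range in CIDR notation.

```python
import ipaddress

# Hypothetical deny list: one single address and two whole ranges.
DENY_LIST = [
    ipaddress.ip_network("203.0.113.7/32"),   # a single IPv4 address
    ipaddress.ip_network("198.51.100.0/24"),  # 256 IPv4 addresses
    ipaddress.ip_network("2001:db8::/32"),    # an IPv6 range
]


def is_denied(client_ip):
    """Return True if client_ip falls inside any network on the deny list."""
    addr = ipaddress.ip_address(client_ip)
    # Testing an IPv4 address against an IPv6 network (or vice versa) is False.
    return any(addr in net for net in DENY_LIST)
```

Blocking whole ranges catches a bot that rotates through nearby addresses, but it also risks blocking real visitors who share that range, which is one reason this approach is easy to get wrong.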

For spam bots, you can implement the best Captcha available, which changes from time to time. As mentioned above, there’s an ongoing arms race with spambots, and Captcha is not a bystander; just search for “Captcha breakers” and you’ll see what I mean. Even the most advanced Captcha technology will be broken before long, letting spambots through if it’s your only line of defense.

As a final note, if you want a list of bots you can block, such lists exist. This one is the most comprehensive bot list online, but it comes with a steep price, literally: if you’re not a member of the association, access can cost $14,000. Needless to say, it’s also extremely long and not practical for blocking bots manually.

ContentPowered.com
